Long ago, I wrote about binary explosion — the practice of building a large number of assemblies to provide comparatively little functionality. Despite my protestations, I do not see this problem lessening any time soon. If anything, with the increasing prominence of package managers such as NuGet, it seems inevitable that dozens of micro-libraries will become an expected and accepted result.

I might be a lone voice in the crowd, but I still feel it is worth a little extra effort to “right-size” your project assembly footprint. There are very real consequences to slicing too finely, especially when the projects in question are developed and maintained within the boundaries of a small organization and not intended for separate consumption. To that end, I would like to share one helpful practice for taming assembly sprawl that might differ from conventional wisdom.

Recently I have been involved in building several services that have distinct lifetimes but share many core operations and run on the same servers. For example, Service A transforms data and publishes it to a data folder that Service B consumes, and Service C reads some of the same data that A and B work with. These services are independent in some sense: they could be deployed or taken down for maintenance without affecting each other. They are also coupled by data contracts and by interactions with common services such as the file system.

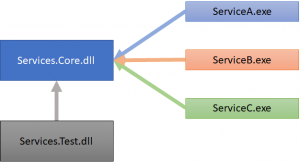

In the typical approach, these services might share several common libraries for file I/O, data contract assemblies, and so on. However, since this code was being developed by a single small team and the services shared so much core logic, I decided that a monolithic core was a good direction. Essentially, this meant a single DLL with all the necessary functionality and a corresponding test assembly with lots of high-quality microtests. Each service was ostensibly a separate EXE with a suitable entry point, but almost all the actual service code resided in the core DLL. The resulting dependency graph was simple: each service EXE referenced the core DLL, and the test assembly referenced it as well.

This design made sense in context. Much of the code needed to be reused across multiple services, there was no Conway’s Law friction since all ownership was within one organization, and testability was of prime importance. As a nice side effect, the dependency graph was quite compact.

The only drawback, which is not necessarily unique to this design, was ensuring compatibility as the core module evolved. Since the services were rolled out independently, each carried its own copy of the core DLL. If behavior differed between the core copy shipped with V1.1 of Service B (already deployed) and the copy shipped with V1.2 of Service A (currently deploying), communication between the services could break. The good-enough solution was to create a “Contracts” namespace within the core module to house the code that could not be cavalierly modified. It signaled to everyone that changes in that area could “break the contract” and needed extra scrutiny.
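To make the shape of this layout concrete, here is a minimal single-file sketch. The services described here are .NET assemblies, but Python is used purely for brevity, so the mapping is approximate. A "contracts" section stands in for the Contracts namespace, plain functions stand in for shared logic in the core DLL, and each `service_*_main` function stands in for a thin EXE entry point. All names, the record fields, the `published.jsonl` file, and the JSON-lines wire format are hypothetical illustrations, not details from the actual services.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path

# --- "Contracts" area (hypothetical names) ----------------------------------
# Code here is shared by independently deployed services. Renaming a field or
# changing the wire format can break a service still running an older core copy.
CONTRACT_VERSION = 1


@dataclass(frozen=True)
class PublishedRecord:
    key: str
    value: int


def serialize(record: PublishedRecord) -> str:
    # Shared wire format: one JSON object per line, tagged with the version.
    return json.dumps({"v": CONTRACT_VERSION, **asdict(record)})


def deserialize(line: str) -> PublishedRecord:
    data = json.loads(line)
    if data.get("v") != CONTRACT_VERSION:
        raise ValueError(f"unsupported contract version: {data.get('v')}")
    return PublishedRecord(key=data["key"], value=data["value"])


# --- Shared core logic (free to evolve; not part of the contract) -----------
DATA_FILE = "published.jsonl"  # hypothetical file name in the data folder


def publish(folder: Path, records: list[PublishedRecord]) -> None:
    text = "".join(serialize(r) + "\n" for r in records)
    (folder / DATA_FILE).write_text(text)


def read_all(folder: Path) -> list[PublishedRecord]:
    lines = (folder / DATA_FILE).read_text().splitlines()
    return [deserialize(line) for line in lines if line]


# --- Thin entry points (each would be its own EXE) ---------------------------
def service_a_main(folder: Path) -> None:
    # Service A: transform some input and publish it to the data folder.
    raw = {"alpha": 1, "beta": 2}
    publish(folder, [PublishedRecord(k, v * 10) for k, v in raw.items()])


def service_b_main(folder: Path) -> int:
    # Service B: consume everything Service A published.
    return sum(r.value for r in read_all(folder))


def service_c_main(folder: Path) -> list[str]:
    # Service C: read a subset of the same data.
    return [r.key for r in read_all(folder) if r.value > 15]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_folder = Path(tmp)
        service_a_main(data_folder)
        print(service_b_main(data_folder))  # 30
        print(service_c_main(data_folder))  # ['beta']
```

The `CONTRACT_VERSION` check is an extra safeguard added for this sketch. The approach described above relies on the namespace boundary and on review scrutiny rather than on a runtime check. Everything outside the contracts section can be refactored freely, because each service ships with its own copy of the core.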

A monolithic core is not a great fit for every problem. It seems to work best for code that is developed, maintained, and operated “under one roof,” so to speak. There is no sense in incurring the extra cost of independent microservices when something closer to a modular monolith would suffice.