Async aficionados should know by now that I/O-bound workloads based on dedicated threads simply do not scale past a certain limit. Typically, when you start creating more threads than you have logical CPU cores, you will suffer ever-increasing overhead due to context switches.

For fun, I wanted to illustrate just how bad it can get using a very naïve thread-based I/O workload. All of the code is available in the GitHub ThreadSample project. The I/O workload in my sample app is a single named pipe receiver and a single named pipe sender. The messaging technology is Windows Communication Foundation (WCF), using raw duplex channels.

The Sender class manages the WCF channel infrastructure and provides a SendAsync method to add a concurrent send worker, using either a true async implementation or a dedicated thread with sync calls (i.e. dreaded “blocking code”):

    public Task SendAsync(CancellationToken token)
    {
        if (this.useDedicatedThread)
        {
            return Task.Factory.StartNew(() => this.SendInner(token), TaskCreationOptions.LongRunning);
        }
        else
        {
            return this.SendInnerAsync(token);
        }
    }

Here is the blocking version that eats a thread:

    private void SendInner(CancellationToken token)
    {
        try
        {
            while (!token.IsCancellationRequested)
            {
                using (Message message = Message.CreateMessage(MessageVersion.Default, "http://tempuri.org"))
                {
                    this.channel.Send(message);
                }
                this.OnSent();
                Thread.Sleep(this.delay);
            }
        }
        catch (CommunicationException)
        {
        }
        catch (TimeoutException)
        {
        }
    }

And here is the true async version. Note that the async and await keywords (along with Task.Delay and TaskFactory.FromAsync) make for a highly readable implementation which looks almost exactly the same as the sync version.

    private async Task SendInnerAsync(CancellationToken token)
    {
        try
        {
            while (!token.IsCancellationRequested)
            {
                using (Message message = Message.CreateMessage(MessageVersion.Default, "http://tempuri.org"))
                {
                    await Task.Factory.FromAsync(
                        (m, c, s) => ((IOutputChannel)s).BeginSend(m, c, s),
                        r => ((IOutputChannel)r.AsyncState).EndSend(r),
                        message,
                        this.channel);
                }
                this.OnSent();
                await Task.Delay(this.delay);
            }
        }
        catch (CommunicationException)
        {
        }
        catch (TimeoutException)
        {
        }
    }
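
If you want to try the FromAsync shape without any WCF plumbing, here is a minimal sketch that wraps the Begin/End pair on Stream in the same way. The FromAsyncSketch class and its WriteAsync method are hypothetical names of mine, not part of the sample project, and MemoryStream is only a stand-in for the channel.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class FromAsyncSketch
{
    // Hypothetical helper: wraps Stream.BeginWrite/EndWrite in a Task, mirroring
    // the shape of the FromAsync call in SendInnerAsync. The stream is passed as
    // the state object, just as the channel is in the original code.
    public static async Task<long> WriteAsync(Stream stream, byte[] payload)
    {
        await Task.Factory.FromAsync(
            (buffer, callback, state) =>
                ((Stream)state).BeginWrite(buffer, 0, buffer.Length, callback, state),
            result => ((Stream)result.AsyncState).EndWrite(result),
            payload,
            stream);

        // Report the stream length so a caller can confirm the write completed.
        return stream.Length;
    }
}
```

Calling FromAsyncSketch.WriteAsync(new MemoryStream(), new byte[] { 1, 2, 3 }) and awaiting the result should report a length of 3. As in the sender, the state argument carries the target object, so the lambdas do not need to capture anything.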

The main app constructs a receiver, starts it in the background, and then sets up the sender. Send workers are added in a loop; each worker sends a message and waits for a fixed amount of time (100 ms in this case) repeatedly until cancellation. More send workers are added until there are 100,000 concurrently running. Then the app cancels the workload and exits.
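
The driver loop described above can be sketched roughly as follows. This is not the code from the sample project: DriverSketch, RunAsync, and WorkerAsync are hypothetical names, and the worker only counts its "sends" instead of touching a named pipe.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class DriverSketch
{
    // Hypothetical stand-in for a send worker: "send", wait, repeat until cancelled.
    private static async Task<int> WorkerAsync(TimeSpan delay, CancellationToken token)
    {
        int sent = 0;
        while (!token.IsCancellationRequested)
        {
            sent++; // the real worker sends a WCF message here
            await Task.Delay(delay);
        }
        return sent;
    }

    // Adds workers until the target count is running, then cancels the workload,
    // waits for every worker to finish, and returns the total number of sends.
    public static async Task<int> RunAsync(int workerCount, TimeSpan delay)
    {
        var workers = new List<Task<int>>(workerCount);
        using (var cts = new CancellationTokenSource())
        {
            for (int i = 0; i < workerCount; i++)
            {
                workers.Add(WorkerAsync(delay, cts.Token));
            }

            cts.Cancel();
            int[] counts = await Task.WhenAll(workers);
            return counts.Sum();
        }
    }
}
```

Each worker runs synchronously up to its first await, so every worker performs at least one send before the loop moves on to the next one.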

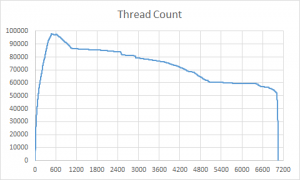

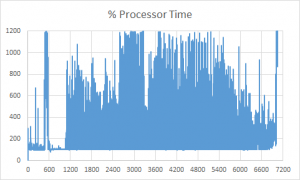

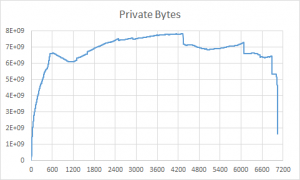

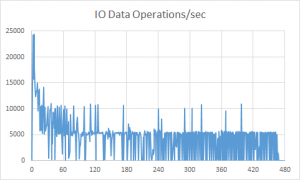

To track the performance of the app, I used a few process perf counters:

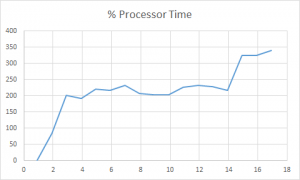

- % Processor Time: how much CPU time is this process using? (Note that this counter has a maximum value of 100.0 times logical core count; if the counter reads 250.0, you can interpret this to mean “using two-and-a-half cores.”)

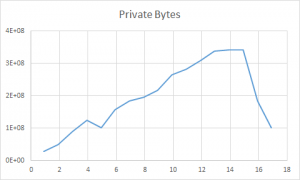

- Private Bytes: how much RAM has this process allocated? (Does not include shared or out-of-process memory, e.g. shared libraries.)

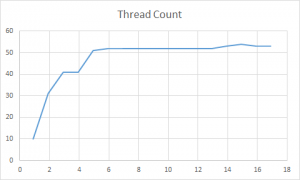

- Thread Count: how many threads is this process running?

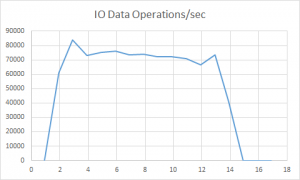

- IO Data Operations/sec: how many read/write operations has this process executed in the last second? (In this workload, we’d expect this counter value to increment on every individual named pipe send and receive operation.)
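
For a quick look at similar numbers from inside the process, without setting up perf counters, something like the sketch below can help. It is not what I used for the charts. ProcessSampler is a hypothetical helper built on System.Diagnostics.Process, and it approximates only the first three counters, since Process does not expose an I/O operations rate.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

public static class ProcessSampler
{
    // Converts CPU time consumed over a wall-clock interval into the same scale as
    // the % Processor Time process counter: 100.0 per fully busy logical core.
    public static double ProcessorTimePercent(TimeSpan cpuUsed, TimeSpan elapsed)
    {
        return 100.0 * cpuUsed.TotalMilliseconds / elapsed.TotalMilliseconds;
    }

    // Blocks for the given interval, then reports CPU, private memory, and threads.
    public static string Sample(TimeSpan interval)
    {
        using (Process process = Process.GetCurrentProcess())
        {
            TimeSpan cpuBefore = process.TotalProcessorTime;
            Stopwatch watch = Stopwatch.StartNew();
            Thread.Sleep(interval);
            process.Refresh(); // discard cached values before reading again

            double percent = ProcessorTimePercent(
                process.TotalProcessorTime - cpuBefore, watch.Elapsed);
            long privateMegabytes = process.PrivateMemorySize64 / (1024 * 1024);

            return string.Format(
                "cpu={0:F1}% privateMB={1} threads={2}",
                percent, privateMegabytes, process.Threads.Count);
        }
    }
}
```

As a sanity check on the scale, 2.5 seconds of CPU time consumed during 1 second of wall-clock time works out to 250.0, i.e. the "two-and-a-half cores" reading mentioned above.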

I compiled the app in Release mode (very important for fair performance comparisons) and ran it on my relatively powerful machine (24 GB RAM, 6 cores with hyper-threading = 12 logical cores). I did two different experiments, one using dedicated threads and the other using async tasks. The results, while within the realm of expectations, are still a bit surprising.

Dedicated threads

Yeah, threads don’t scale, so I did not expect this workload to do well. But just how big that “don’t” is surprised me. First, the app took about eight minutes just to create all the threads, and this was in a tight loop that only called SendAsync and added the Task to a list. (I’m kind of amazed it even let me create that many threads in the first place!) After cancelling the tasks, the app then took nearly two hours to destroy all the threads it had created and clean everything up. During that time no useful work was happening, only cleanup/shutdown logic, since the I/O operations had stopped shortly after all the threads were launched. Memory usage was staggering: 6+ GB near the start, peaking at almost 8 GB halfway through the shutdown process. After about half the threads were spun down, the rest were destroyed relatively quickly. The charts below illustrate the situation in shocking detail.

Async tasks

The async workload performed far better. It was so quick to add all the send workers this time that I needed to artificially slow down the app to get it to run for any appreciable length of time. I settled on a delay of 1 ms every 100 workers, which allowed it to run for about 18 seconds. The first big difference is the thread count: with 100,000 concurrent sends at peak, the async version stabilizes at about 50 threads. The I/O throughput is much better at 70K-80K operations per second, which translates to about 35K-45K messages per second. The cores are much less busy, which is the expected case for an I/O-heavy workload. Memory is also reasonable, never exceeding 400 MB total, considering the number of object allocations for each send worker.
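
The throttling itself is simple. A sketch of the idea, using hypothetical names (ThrottleSketch, AddWorkersAsync) rather than the sample's actual code, might look like this:

```csharp
using System;
using System.Threading.Tasks;

public static class ThrottleSketch
{
    // Invokes addWorker workerCount times, pausing after every batchSize additions.
    // Returns the number of pauses taken so the pacing is easy to verify.
    public static async Task<int> AddWorkersAsync(
        int workerCount, int batchSize, TimeSpan pause, Action addWorker)
    {
        int pauses = 0;
        for (int i = 1; i <= workerCount; i++)
        {
            addWorker();
            if (i % batchSize == 0)
            {
                await Task.Delay(pause);
                pauses++;
            }
        }
        return pauses;
    }
}
```

With 100,000 workers and a batch size of 100, this pauses 1,000 times. A 1 ms Task.Delay often takes noticeably longer than 1 ms in practice because of timer resolution, which may be why the run lasted about 18 seconds rather than about one.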

Conclusion

If you need to scale an I/O workload, async is the obvious way to go. While this is a contrived example, the differences are quite clear and show the efficiency of standard .NET async I/O patterns even without heavily performance-tuned code.
